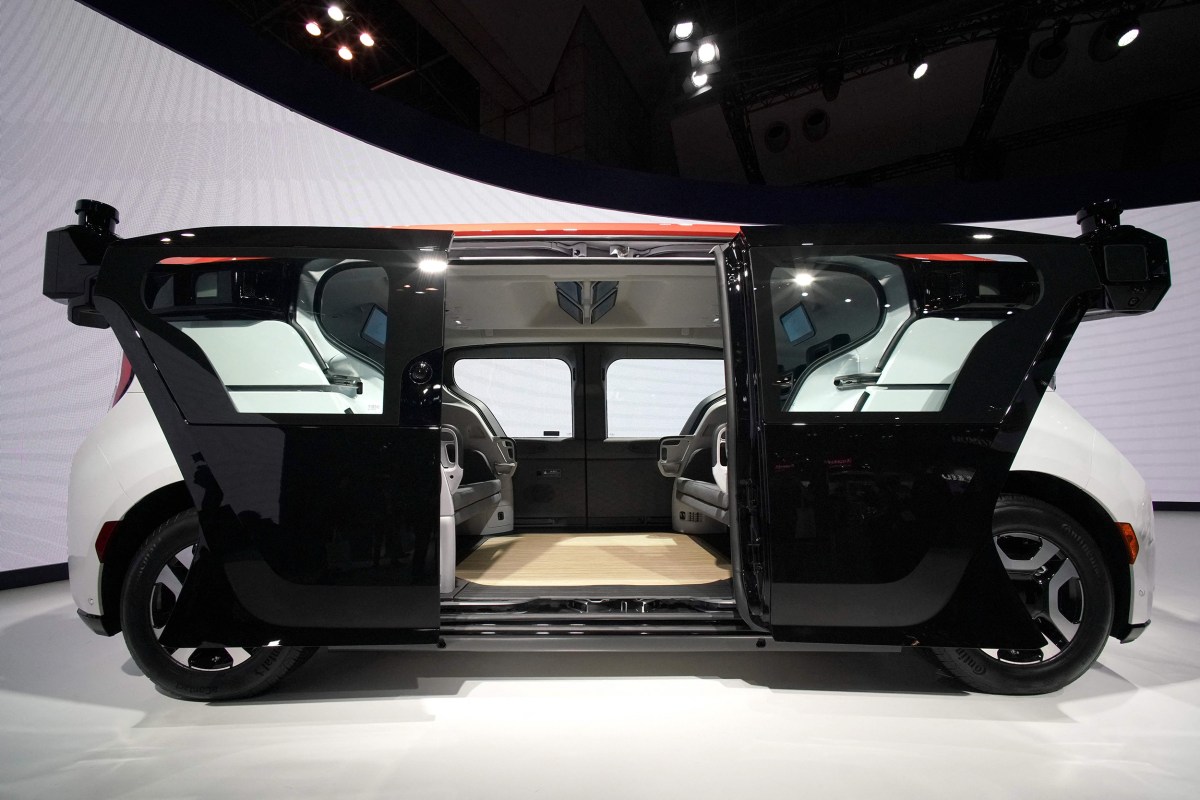

In Phoenix, Austin, Houston, Dallas, Miami, and San Francisco, hundreds of so-called autonomous vehicles, or AVs, operated by General Motors’ self-driving car division, Cruise, have for years ferried passengers to their destinations on busy city roads. Cruise’s app-hailed robot cars build a detailed picture of their surroundings with a combination of sophisticated sensors and navigate roadways and obstacles using machine learning software intended to detect and avoid hazards.

AV companies hope these driverless vehicles will replace not just Uber, but also human driving as we know it. The underlying technology, however, is still half-baked and error-prone, giving rise to widespread criticisms that companies like Cruise are essentially running beta tests on public streets.

Despite the popular skepticism, Cruise insists its robots are profoundly safer than what they’re aiming to replace: cars driven by people. In an interview last month, Cruise CEO Kyle Vogt downplayed safety concerns: “Anything that we do differently than humans is being sensationalized.”

The concerns over Cruise cars came to a head this month. On October 17, the National Highway Traffic Safety Administration announced it was investigating Cruise’s nearly 600-vehicle fleet because of risks posed to other cars and pedestrians. A week later, in San Francisco, where driverless Cruise cars have shuttled passengers since 2021, the California Department of Motor Vehicles announced it was suspending the company’s driverless operations. Following a string of highly public malfunctions and accidents, the immediate cause of the order, the DMV said, was that Cruise withheld footage from a recent incident in which one of its vehicles hit a pedestrian, dragging her 20 feet down the road.

In an internal address on Slack to his employees about the suspension, Vogt stuck to his message: “Safety is at the core of everything we do here at Cruise.” Days later, the company said it would voluntarily pause fully driverless rides in Phoenix and Austin, meaning its fleet will be operating only with human supervision: a flesh-and-blood backup to the artificial intelligence.

Even before its public relations crisis of recent weeks, though, previously unreported internal materials such as chat logs show Cruise knew about two pressing safety issues: Driverless Cruise cars struggled to detect large holes in the road and had so much trouble recognizing children in certain scenarios that they risked hitting them. Yet, until it came under fire this month, Cruise kept its fleet of driverless taxis active, maintaining its regular reassurances of superhuman safety.

“This strikes me as deeply irresponsible at the management level to be authorizing and pursuing deployment or driverless testing, and to be publicly representing that the systems are reasonably safe,” said Bryant Walker Smith, a University of South Carolina law professor and engineer who studies automated driving.

In a statement, a spokesperson for Cruise reiterated the company’s position that a future of autonomous cars will reduce collisions and road deaths. “Our driverless operations have always performed higher than a human benchmark, and we constantly evaluate and mitigate new risks to continuously improve,” said Erik Moser, Cruise’s director of communications. “We have the lowest risk tolerance for contact with children and treat them with the highest safety priority. No vehicle — human operated or autonomous — will have zero risk of collision.”

Though AV companies enjoy a reputation in Silicon Valley as bearers of a techno-optimist transit utopia — a world of intelligent cars that never drive drunk, tired, or distracted — the internal materials reviewed by The Intercept reveal an underlying tension between potentially life-and-death engineering problems and the effort to deliver the future as quickly as possible. With its parent company General Motors, which purchased Cruise in 2016 for $1.1 billion, hemorrhaging money on the venture, any setback for the company’s robo-safety regimen could threaten its business.

Instead of seeing public accidents and internal concerns as yellow flags, Cruise sped ahead with its business plan. Before its permitting crisis in California, the company was, according to Bloomberg, exploring expansion to 11 new cities.

“These are not self-driving cars,” said Smith. “These are cars driven by their companies.”

“May Not Exercise Additional Care Around Children”

Several months ago, Vogt became choked up when talking about a 4-year-old girl who had recently been killed in San Francisco. A 71-year-old woman had taken what local residents described as a low-visibility right turn, striking a stroller and killing the child. “It barely made the news,” Vogt told the New York Times. “Sorry. I get emotional.” Vogt offered that self-driving cars would make for safer streets.

Behind the scenes, meanwhile, Cruise was grappling with its own safety issues around hitting kids with cars. One of the problems addressed in the internal, previously unreported safety assessment materials is the failure of Cruise’s autonomous vehicles to, under certain conditions, effectively detect children so that they can exercise extra caution. “Cruise AVs may not exercise additional care around children,” reads one internal safety assessment. The company’s robotic cars, it says, still “need the ability to distinguish children from adults so we can display additional caution around children.”

In particular, the materials say, Cruise worried its vehicles might drive too fast at crosswalks or near a child who could move abruptly into the street. The materials also say Cruise lacks data around kid-centric scenarios, like children suddenly separating from their accompanying adult, falling down, riding bicycles, or wearing costumes.

The materials note results from simulated tests in which a Cruise vehicle is in the vicinity of a small child. “Based on the simulation results, we can’t rule out that a fully autonomous vehicle might have struck the child,” reads one assessment. In another test drive, a Cruise vehicle successfully detected a toddler-sized dummy but still struck it with its side mirror at 28 miles per hour.

The internal materials attribute the robot cars’ inability to reliably recognize children under certain conditions to inadequate software and testing. “We have low exposure to small VRUs” (vulnerable road users, here a reference to children), “so very few events to estimate risk from,” the materials say. Another section concedes Cruise vehicles’ “lack of a high-precision Small VRU classifier,” or machine learning software that would automatically detect child-shaped objects around the car and maneuver accordingly. The materials say Cruise, in an attempt to compensate for machine learning shortcomings, was relying on human workers behind the scenes to manually identify children encountered by AVs where its software couldn’t do so automatically.

In its statement, Cruise said, “It is inaccurate to say that our AVs were not detecting or exercising appropriate caution around pedestrian children” — a claim undermined by internal Cruise materials reviewed by The Intercept and the company’s statement itself. In its response to The Intercept’s request for comment, Cruise went on to concede that, this past summer during simulation testing, it discovered that its vehicles sometimes temporarily lost track of children on the side of the road. The statement said the problem was fixed and only encountered during testing, not on public streets, but Cruise did not say how long the issue lasted. Cruise did not specify what changes it had implemented to mitigate the risks.

Despite Cruise’s claim that its cars are designed to identify children to treat them as special hazards, spokesperson Navideh Forghani said that the company’s driving software hadn’t failed to detect children but merely failed to classify them as children.

Moser, the Cruise spokesperson, said the company’s cars treat children as a special category of pedestrians because they can behave unpredictably. “Before we deployed any driverless vehicles on the road, we conducted rigorous testing in a simulated and closed-course environment against available industry benchmarks,” he said. “These tests showed our vehicles exceed the human benchmark with regard to the critical collision avoidance scenarios involving children.”

“Based on our latest assessment this summer,” Moser continued, “we determined from observed performance on-road, the risk of the potential collision with a child could occur once every 300 million miles at fleet driving, which we have since improved upon. There have been no on-road collisions with children.”

Do you have a tip to share about safety issues at Cruise? The Intercept welcomes whistleblowers. Use a personal device to contact Sam Biddle on Signal at +1 (978) 261-7389, by email at sam.biddle@theintercept.com, or by SecureDrop.

Cruise has known its cars couldn’t detect holes, including large construction pits with workers inside, for well over a year, according to the safety materials reviewed by The Intercept. Internal Cruise assessments describe this flaw as a major risk to the company’s operations. Cruise determined that at its current, relatively minuscule fleet size, one of its AVs would drive into an unoccupied open pit roughly once a year, and into a construction pit with people inside it about every four years. Without fixes to the problems, those rates would presumably increase as more AVs were put on the streets.

It appears this concern wasn’t hypothetical: Video footage captured from a Cruise vehicle and reviewed by The Intercept shows one self-driving car, operating in an unnamed city, driving directly up to a construction pit with multiple workers inside. Although the construction site was surrounded by orange cones, the Cruise vehicle drives straight toward it before coming to an abrupt halt. While it can’t be discerned from the footage whether the car entered the pit or stopped at its edge, the vehicle appears to be only inches away from several workers, one of whom tried to stop the car by waving a “SLOW” sign across the windshield of the driverless car.

“Enhancing our AV’s ability to detect potential hazards around construction zones has been an area of focus, and over the last several years we have conducted extensive human-supervised testing and simulations resulting in continued improvements,” Moser said. “These include enhanced cone detection, full avoidance of construction zones with digging or other complex operations, and immediate enablement of the AV’s Remote Assistance support/supervision by human observers.”

Known Hazards

Cruise’s undisclosed struggles with perceiving and navigating the outside world illustrate the perils of leaning heavily on machine learning to safely transport humans. “At Cruise, you can’t have a company without AI,” the company’s artificial intelligence chief told Insider in 2021. Cruise regularly touts its AI prowess in the tech media, describing it as central to preempting road hazards. “We take a machine-learning-first approach to prediction,” a Cruise engineer wrote in 2020.

The fact that Cruise is even cataloguing and assessing its safety risks is a positive sign, said Phil Koopman, an engineering professor at Carnegie Mellon, emphasizing that the safety issues that worried Cruise internally have been known to the field of autonomous robotics for decades. Koopman, who has a long career working on AV safety, faulted the data-driven culture of machine learning that leads tech companies to contemplate hazards only after they’ve encountered them, rather than before. The fact that robots have difficulty detecting “negative obstacles” — AV jargon for a hole — is nothing new.

“They should have had that hazard on their hazard list from day one,” Koopman said. “If you were only training it how to handle things you’ve already seen, there’s an infinite supply of things that you won’t see until it happens to your car. And so machine learning is fundamentally poorly suited to safety for this reason.”

The safety materials from Cruise raise an uncomfortable question for the company about whether robot cars should be on the road if it’s known they might drive into a hole or a child.

“If you can’t see kids, it’s very hard for you to accept that not being high risk — no matter how infrequent you think it’s going to happen,” Koopman explained. “Because history shows us people almost always underestimate the risk of high severity because they’re too optimistic. Safety is about the bad day, not the good day, and it only takes one bad day.”

Koopman said the answer rests largely on what steps, if any, Cruise has taken to mitigate that risk. According to one safety memo, Cruise began operating fewer driverless cars during daytime hours to avoid encountering children, a move it deemed effective at mitigating the overall risk without fixing the underlying technical problem. In August, Cruise announced the cuts to daytime ride operations in San Francisco but made no mention of its attempt to lower risk to local children. (“Risk mitigation measures incorporate more than AV behavior, and include operational measures like alternative routing and avoidance areas, daytime or nighttime deployment and fleet reductions among other solutions,” said Moser. “Materials viewed by The Intercept may not reflect the full scope of our evaluation and mitigation measures for a specific situation.”)

A quick fix like shifting hours of operation presents an engineering paradox: How can the company be so sure it’s avoiding a thing it concedes it can’t always see? “You kind of can’t,” said Koopman, “and that may be a Catch-22, but they’re the ones who decided to deploy in San Francisco.”

Precautions like reduced daytime operations will only lower the chance that a Cruise AV will have a dangerous encounter with a child, not eliminate that possibility. In a large American city, where it’s next to impossible to run a taxi business that will never need to drive anywhere a child might possibly appear, Koopman argues Cruise should have kept safety drivers in place while it knew this flaw persisted. “The reason you remove safety drivers is for publicity and optics and investor confidence,” he told The Intercept.

Koopman also noted that there’s not always linear progress in fixing safety issues. In the course of trying to fine-tune its navigation, Cruise’s simulated tests showed its AV software missed children at an increased rate, despite attempts to fix the issues, according to materials reviewed by The Intercept.

The two larger issues of kids and holes weren’t the only robot flaws potentially imperiling nearby humans. According to other internal materials, some vehicles in the company’s fleet suddenly began making unprotected left turns at intersections, a maneuver Cruise cars are supposed to be barred from attempting. The potentially dangerous turns were chalked up to a botched software update.

The Future of Road Safety?

Part of the self-driving industry’s techno-libertarian promise to society — and a large part of how it justifies beta-testing its robots on public roads — is the claim that someday, eventually, streets dominated by robot drivers will be safer than their flesh-based predecessors.

Cruise cited a RAND Corporation study to make its case. “It projected deploying AVs that are on average ten percent safer than the average human driver could prevent 600,000 fatalities in the United States over 35 years,” wrote Vice President for Safety Louise Zhang in a company blog post. “Based on our first million driverless miles of operation, it appears we are on track to far exceed this projected safety benefit.”

During General Motors’ quarterly earnings call — the same day California suspended Cruise’s operating permit — CEO Mary Barra told financial analysts that Cruise “is safer than a human driver and is constantly improving and getting better.”

In the 2022 “Cruise Safety Report,” the company outlines a deeply unflattering comparison of fallible human drivers to hyper-intelligent robot cars. The report pointed out that driver distraction was responsible for more than 3,000 traffic fatalities in 2020, whereas “Cruise AVs cannot be distracted.” Crucially, the report claims, a “Cruise AV only operates in conditions that it is designed to handle.”

When it comes to hitting kids, however, internal materials indicate the company’s machines were struggling to match the safety performance of even an average human: Cruise’s goal at the time was merely for its robots to drive as safely around children as an average Uber driver, a goal the internal materials note it was failing to meet.

“It’s I think especially egregious to be making the argument that Cruise’s safety record is better than a human driver,” said Smith, the University of South Carolina law professor. “It’s pretty striking that there’s a memo that says we could hit more kids than an average rideshare driver, and the apparent response of management is, keep going.”

In a statement to The Intercept, Cruise confirmed its goal of performing better than ride-hail drivers. “Cruise always strives to go beyond existing safety benchmarks, continuing to raise our own internal standards while we collaborate with regulators to define industry standards,” said Moser. “Our safety approach combines a focus on better-than-human behavior in collision imminent situations, and expands to predictions and behaviors to proactively avoid scenarios with risk of collision.”

Cruise and its competitors have worked hard to keep going despite safety concerns, public and nonpublic. Before the California Public Utilities Commission voted to allow Cruise to offer driverless rides in San Francisco, where Cruise is headquartered, the city’s public safety and traffic agencies lobbied for a slower, more cautious approach to AVs. The commission didn’t agree with the agencies’ worries. “While we do not yet have the data to judge AVs against the standard human drivers are setting, I do believe in the potential of this technology to increase safety on the roadway,” said commissioner John Reynolds, who previously worked as a lawyer for Cruise.

Had there always been human safety drivers accompanying all robot rides — which California regulators let Cruise ditch in 2021 — Smith said there would be less cause for alarm. A human behind the wheel could, for example, intervene to quickly steer a Cruise AV out of the path of a child or construction crew that the robot failed to detect. Though the company has put them back in place for now, dispensing entirely with human backups is ultimately crucial to Cruise’s long-term business, part of its pitch to the public that steering wheels will become a relic. With the wheel still there and a human behind it, Cruise would struggle to tout its technology as groundbreaking.

“We’re not in a world of testing with in-vehicle safety drivers, we’re in a world of testing through deployment without this level of backup and with a whole lot of public decisions and claims that are in pretty stark contrast to this,” Smith explained. “Any time that you’re faced with imposing a risk that is greater than would otherwise exist and you’re opting not to provide a human safety driver, that strikes me as pretty indefensible.”